Introduction

Application logging is a critical part of log management and helps keep businesses running smoothly. Without proper log records, it is difficult to trace what happened, and troubleshooting takes much longer. That’s why application logging is essential for optimising, monitoring, and troubleshooting your integration solutions. However, because of CloudHub's limits on log retention, it is difficult to track or monitor logs after a certain period.

To overcome this, organisations typically adopt log aggregation tools. To simplify this process, we designed a service that publishes the logs generated by Mule applications to log aggregators such as ELK and Splunk.

What is the Log Aggregation Service?

The log aggregation service is designed to publish the logs generated by Mule applications to log aggregators such as ELK and Splunk. Its key features are:

- It is a REST-based service that receives logs over HTTP and publishes them to ELK or Splunk.

- It supports publishing logs in different formats.

- It publishes logs based on indexing, so that logs from different applications can be grouped and searched separately.
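To illustrate what "different formats" can mean in practice, the sketch below renders one log record either as a JSON event or as a plain text line, tagged with its source. The record fields (`timestamp`, `level`, `message`), the `format_log` helper, and the format names `"json"` and `"text"` are hypothetical assumptions for illustration, not the service's actual code.

```python
import json


def format_log(record: dict, fmt: str, source: str) -> str:
    """Render a log record in the requested format (hypothetical helper)."""
    if fmt == "json":
        # Structured form: the whole record becomes the event body, tagged with its source.
        return json.dumps({"event": record, "source": source})
    if fmt == "text":
        # Plain form: one human-readable line per record.
        return f"{record['timestamp']} {record['level']} [{source}] {record['message']}"
    raise ValueError(f"unsupported format: {fmt}")


# Sample record (illustrative values only).
record = {"timestamp": "2024-01-01T10:00:00Z", "level": "INFO", "message": "Order received"}
print(format_log(record, "text", "order-api"))
print(format_log(record, "json", "order-api"))
```

Keeping the source attached in both formats is what lets the aggregator group and search the logs per application.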

Log Aggregation Utility

Setting up Splunk

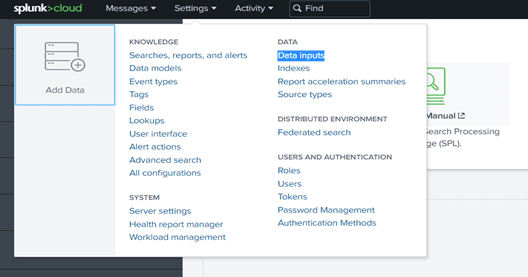

1. Once Splunk is installed and configured, the first step is to create a data input. Splunk supports several types of data input. Navigate to Settings -> Data inputs (under the Data section) and configure the input you want.

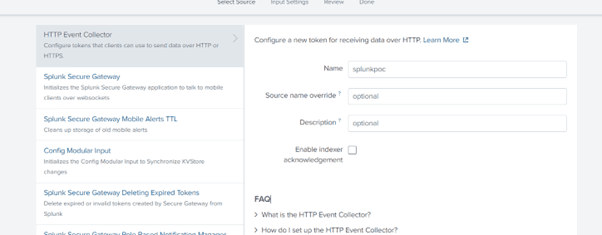

2. Create a new HTTP Event Collector input by providing the details shown below.

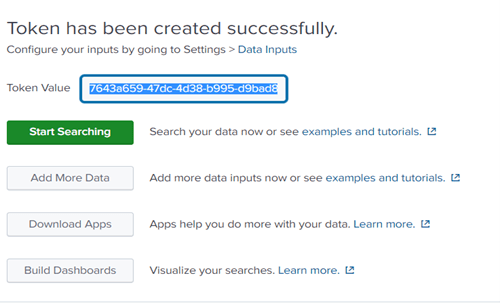

3. Splunk generates a token for the HTTP Event Collector. Use this token to authenticate when publishing logs to Splunk.

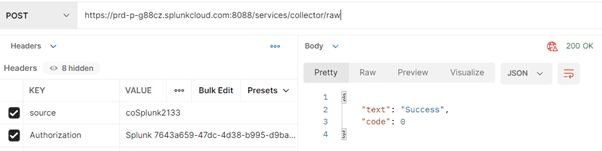

4. Using the generated token, you can publish logs to Splunk by configuring the endpoint details as shown below.

- Send the logs to the `services/collector/raw` or `services/collector/event` endpoint to create an entry in Splunk.

- Configure the source details. Splunk stores the source with each event, so you can use it to identify and filter the logs.
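For reference, the request described in step 4 can be sketched in Python using only the standard library. It assumes a hypothetical Splunk host `splunk.example.com` on port 8088 (HEC's default port) and a placeholder token; HEC expects the token in the `Authorization` header as `Splunk <token>`. On the event endpoint the source goes inside the JSON envelope, while on the raw endpoint it is passed as a query parameter. Depending on your HEC settings (for example, if indexer acknowledgment is enabled), Splunk may also require a channel identifier, which this sketch omits.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request


def build_hec_request(base_url: str, token: str, message: str, source: str, raw: bool = False) -> Request:
    """Build (but do not send) a POST request to Splunk's HTTP Event Collector."""
    headers = {"Authorization": f"Splunk {token}"}
    if raw:
        # Raw endpoint: the body is the log text itself; metadata goes in the query string.
        url = f"{base_url}/services/collector/raw?{urlencode({'source': source})}"
        body = message.encode("utf-8")
        headers["Content-Type"] = "text/plain"
    else:
        # Event endpoint: the body is a JSON envelope with the log in the "event" field.
        url = f"{base_url}/services/collector/event"
        body = json.dumps({"event": message, "source": source}).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return Request(url, data=body, headers=headers, method="POST")


# Hypothetical host and placeholder token; replace with your own values.
req = build_hec_request("https://splunk.example.com:8088", "<your-hec-token>", "Order received", "order-api")
# To actually send it: urllib.request.urlopen(req)
```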

Log Aggregation Utility Service

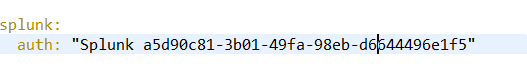

1. Once you have set up your Splunk configuration, configure the following properties in the log aggregation service.

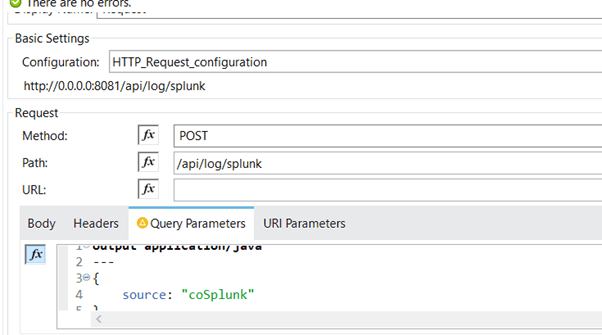

2. In your Mule application, configure an HTTP request to the log aggregation service endpoint, send your application's logs to it, and pass the source as a query parameter.

3. Once the request is sent, the log aggregation service publishes the logs to Splunk, where they can be viewed on the Splunk dashboard.
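The flow in steps 2 and 3 can be sketched as follows. The first function shows the call a Mule application makes, with the source travelling as a query parameter; the second shows one way the service might turn that call into a Splunk HEC event request. The service URL, HEC URL, token placeholder, JSON-array request body, and the `"default"` fallback source are all hypothetical assumptions, not the service's actual configuration or code. Neither function sends anything; pass the returned request to `urllib.request.urlopen` to do so.

```python
import json
from urllib.parse import parse_qs, urlencode, urlsplit
from urllib.request import Request

# Hypothetical values; a real deployment would read these from configuration properties.
SERVICE_URL = "http://log-aggregator.example.com/api/logs"
HEC_URL = "https://splunk.example.com:8088/services/collector/event"
HEC_TOKEN = "<your-hec-token>"


def build_publish_request(source: str, logs: list) -> Request:
    """Client side (step 2): POST the application's logs, with the source as a query parameter."""
    url = f"{SERVICE_URL}?{urlencode({'source': source})}"
    body = json.dumps(logs).encode("utf-8")
    return Request(url, data=body, headers={"Content-Type": "application/json"}, method="POST")


def to_hec_request(url: str, body: bytes) -> Request:
    """Service side (step 3): turn an incoming publish call into one batched HEC request."""
    query = parse_qs(urlsplit(url).query)
    source = query.get("source", ["default"])[0]  # hypothetical fallback when no source is given
    # HEC accepts several JSON event objects concatenated in a single request body.
    payload = "".join(json.dumps({"event": entry, "source": source}) for entry in json.loads(body))
    headers = {"Authorization": f"Splunk {HEC_TOKEN}", "Content-Type": "application/json"}
    return Request(HEC_URL, data=payload.encode("utf-8"), headers=headers, method="POST")


# Usage: simulate one round trip without any network calls.
incoming = build_publish_request("order-api", [{"level": "INFO", "message": "Order received"}])
outgoing = to_hec_request(incoming.full_url, incoming.data)
```

Batching every entry from one call into a single HEC request keeps round trips to Splunk low; sending one request per entry would also work, at the cost of more HTTP calls.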